Why does Big Data Science Depend on Skilled Data Engineers?

Big Data has, without a doubt, become the standard technology in all high-performing sectors. Leading companies now rely their decision-making abilities on insights generated from big data analysis. The concept that Big Data may provide you an advantage over your competition is true for both businesses and analytics experts. For people who enjoy working with statistics and are passionate about uncovering patterns in rows of unstructured data, big data opens up a world of possibilities.

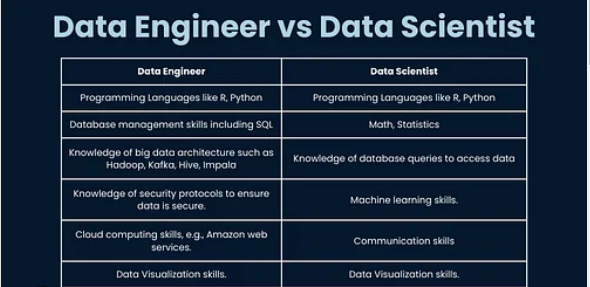

As the discipline of data science advances, a new specialty called data engineering is emerging. Data engineers have a higher value than data scientists, according to tech titans like Facebook, Amazon, and Google. That’s why they’re looking for someone who can create crucial infrastructures such as data pipelines and warehouses. You can take up a big data engineer certification course to build your career in this field.

What is big data engineering?

A Big Data Engineer is a person who is in charge of the design and development of data pipelines. They’re the ones that collect data from diverse sources and organize it into sets for analysts and data scientists to work with. Big Data Engineers are in high demand, and rightfully so. The production of data has risen all across the world. But it’s useless until it’s processed and evaluated properly. Big data is processed to extract useful information, which enhances overall performance. Organizations may improve their business choices, products, and marketing effectiveness by doing so. Professionals in the field of Big Data can help with this.

A Big Data Engineer is one of the greatest employment roles in this sector. Professionals who create, manage, test, and assess a company’s Big Data infrastructure are known as Big Data Engineers. They experiment with Big Data and use it for the benefit and growth of the company.

The three Vs of big data, i.e. variety, volume, and velocity, characterize big data.

Volume: Big data is used to handle large amounts of unstructured, low-density data. The information can be of uncertain significance and come from several places, including social media, corporate sanctions, and sensor and machine data.

Velocity: The rate at which data is received from the sources is referred to as velocity. Rather than being written to disc, the maximum velocity of data is usually streamed straight into the machine’s memory.

Variety: Variety refers to the many data kinds that are available. Unlike traditional kinds of data, which are neatly structured and can be assembled into a relational database, big data is typically in unstructured form.

How to become a big data engineer?

Big data engineer positions are in great demand, and big data engineers have considerable technical expertise and years of experience. A Bachelor’s degree in computer science, software engineering, mathematics, or another IT degree is required to work as a big data engineer. A big data engineer needs a variety of technical skills and expertise in addition to a degree to be successful in their position. So, whether it’s SQL, Python, or several cloud platforms, an ambitious big data engineer may thrive with the correct education. Education, job experience, and optional certifications are all part of the professional road to becoming a big data engineer. Engineers can improve their skills and knowledge throughout the journey, thereby increasing their chances of being employed.

- Education: Developing an interest in computer science, math, physics, statistics, or computer engineering is the first step toward becoming a big data engineer. These topics are often presented in high school and then further developed in undergraduate and postgraduate studies.

- Experiential Learning: Even when pursuing an advanced degree, gaining job experience may help students acquire the skills required of a big data engineer: communication, problem-solving, analytical skills, critical thinking, logical reasoning, and attention to detail.

- Accreditation (Optional): The following professional qualifications are available to big data scientists:

- Cloudera Certified Professional (CCP) Data Engineer: Data analysis, workflow development, data ingestions, data staging and storage, and transformation are all competencies that Cloudera qualifies as experts in.

- Certified Big Data Professional (CBDP): The CBDP certification focuses on assessing data science and data business intelligence competency. This certification was created by the Institute for Certification of Computing Professionals, and the cost varies depending on the level of the test.

- Google Cloud Certified Professional Data Engineer: The Google Cloud certification assesses your abilities to create data structures, develop data systems, and analyze and design for machine learning, security, and compliance.

Importance of data engineering

The majority of businesses have undergone a digital transformation in the recent decade. This has resulted in inconceivable numbers of new sorts of data, as well as considerably more complex data being created at a greater rate. While it was obvious that Data Scientists would be required to make sense of it all, it was less obvious that someone would be required to organize and maintain the quality, security, and availability of this data for the Data Scientists to do their jobs.

Data Scientists were frequently required to develop the necessary infrastructure and data pipelines to accomplish their work in the early days of big data analytics. This was not necessarily part of their skill set or work requirements.

Data Engineering improves the efficiency of data science. If no such sector exists, we will have to devote more time to data analysis to answer difficult business challenges. As a result, Data Engineering necessitates a thorough grasp of technology, tools, and the reliable execution of big datasets.

Data Engineering’s purpose is to offer an orderly, uniform data flow that enables data-driven models like machine learning models and data analysis. The data flow described above can pass via numerous companies and teams. We utilize the data pipeline approach to achieve the data flow. It is a system that consists of several separate programs that perform various actions on stored data.

Skills to invest in Big Data Space

Apache Hadoop: Over the last few years, Apache Hadoop has grown tremendously. Recruiters are now looking for components like HDFS, Pig, MapReduce, HBase, and Hive. Although Hadoop is over a decade old, many software businesses still rely on its clusters because of its ability to give properly mapped results.

NoSQL: Traditional SQL databases such as Oracle, DB2, and others are increasingly being replaced by NoSQL databases such as MongoDB and Couchbase. This is because NoSQL databases are better suited to handling and storing large amounts of data.

Apache Spark: Working with real-time processing frameworks like Apache Spark is the next talent you’ll need. As a Big Data Engineer, you’ll be working with massive amounts of data, which necessitates the adoption of an analytics engine like Spark, which can handle batch and real-time processing.

Data mining and modeling: Experience with data mining, data wrangling, and data modeling approaches is the last skill needed. Preprocessing and cleaning data using various methods, finding undiscovered trends and patterns in the data, and preparing it for analysis are all phases in data mining and data wrangling.

Data engineers gather useful information. They convert and transport the data into “pipelines” for the Data Science team. Depending on the task, they might employ programming languages like Java, Scala, C++, or Python. Data Scientists are responsible for analyzing, testing, aggregating, optimizing, and presenting data to the firm.