How the Central Processing Unit (CPU) Actually Works in Practice

Do you really have any idea what your computer is doing when you use it? If you’re like most people on planet earth, the answer is a resounding “no.”

The way a computer works is just as interesting as the things that pop up on its screen, and knowing how things operate can help you in a number of ways. Understanding computers helps you to make smarter purchases, save money on repairs, and appreciate the devices you use every day.

We’re going to look at the central processing unit today, examining how it works. We won’t go too far into the technicalities, but you’ll get a solid idea of how your CPU works.

Let’s get started.

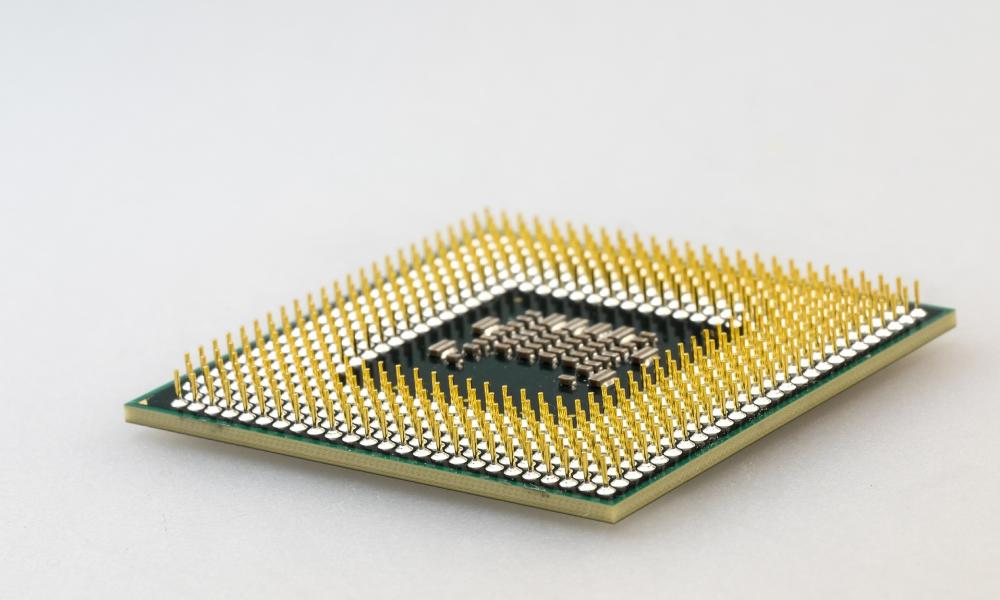

What Is a Central Processing Unit?

A central processing unit (CPU) is often described as the brain of a device. It’s the starting point for your computer’s functions, and it gives the rest of the machine the instructions it needs to carry them out.

Nearly every digital device has some version of a CPU inside of it. Whether it’s a little toy or the most powerful supercomputer on earth, there’s a central processing unit somewhere inside. In the case of computers, the CPU directs information that comes from hardware, software, and various forms of memory.

It’s also responsible for interpreting those signals and turning them into results, such as the images you see on the screen. You might see the CPU referred to as the processor, microprocessor, computer processor, or central processor. All of those names refer to the same thing.

So, the CPU is like the quarterback or conductor of the computer. It’s there to orchestrate all of the operations that the computer is summoned to do. But how does it do that, and what gives it the ability to manage so much information?

Transistors and Semiconductors

All of the technological splendor we’re exposed to comes as a result of electrical signals. Tiny signals sent through electronic mazes provide us with the ability to stream movies, write a thesis, develop code, or engage with virtual reality.

Signals are channeled through a very complex network of what are called transistors. Think of transistors as little nodes, all of which serve as waypoints for electrical signals to travel through. Imagine a traffic cop standing in the middle of an intersection.

The cop can tell cars to come through or demand that they halt. In the same way, the transistor tells the signal what to do. A transistor has two functions: to “amplify” or to “switch” the current that runs through it.

In the case of amplification, the transistor uses a small amount of energy to boost either the voltage or the current of the signal. In the case of switching, the transistor either lets a signal pass or blocks it.
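To make the two roles concrete, here is a minimal Python sketch. It is a toy model rather than real electronics: the function names `switch` and `amplify` and the gain of 10 are made up for illustration.

```python
def switch(control_on: bool, signal: float) -> float:
    """Pass the signal through when the control input is on; block it otherwise."""
    return signal if control_on else 0.0


def amplify(signal: float, gain: float = 10.0) -> float:
    """Boost a small input signal by a fixed, hypothetical gain."""
    return signal * gain


if __name__ == "__main__":
    print(switch(True, 1.0))   # the traffic cop waves the signal through
    print(switch(False, 1.0))  # the traffic cop stops the signal
    print(amplify(0.5))        # a small signal comes out larger
```

A real transistor is an analog component with limits on how much it can boost a signal, so treat this only as a way to picture the traffic-cop idea.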

A material that can be made to either carry or block a signal is classified as a semiconductor. A “conductor,” by contrast, is something that always allows electricity to flow through it. Copper, for example, is a good conductor.

That’s all great, but we’re still just talking about basic physics. How can we take something simple like a transistor and turn it into a paradigm-bending piece of technology?

Microprocessor Chips and Transistor Volume

The microprocessor chip is, essentially, “the CPU.” The chip holds all of the circuitry the CPU needs to do its job. The first commercial microprocessors of the early 1970s held only a few thousand transistors.

Now, however, the device you’re looking at probably has billions of transistors inside of it. You read that right. Thanks to extremely precise manufacturing equipment, we’re able to make transistors whose smallest features are measured in nanometers, on the order of tens of atoms across.

Things are expected to keep growing, too. Moore’s Law is an observation made by Gordon Moore, one of the founders of Intel. It holds that, for roughly the same cost, the number of transistors on a chip doubles about every two years (often quoted as every 18 to 24 months).

He first made the prediction in 1965 and revised it in 1975, and it has held up fairly well since then. That’s how chips have grown to hold tens of billions of transistors.
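The doubling is easier to appreciate with numbers. The sketch below is a rough illustration, not a forecast: the function name `projected_transistors` is made up, and the starting point of about 2,300 transistors in 1971 (the Intel 4004) with one doubling every two years is an assumption chosen for the example.

```python
def projected_transistors(start_count: int, start_year: int, end_year: int,
                          doubling_years: float = 2.0) -> float:
    """Project a transistor count assuming one doubling every `doubling_years`."""
    doublings = (end_year - start_year) / doubling_years
    return start_count * 2 ** doublings


if __name__ == "__main__":
    # Assumed starting point: about 2,300 transistors in 1971 (Intel 4004).
    for year in (1971, 1991, 2011, 2021):
        print(year, f"{projected_transistors(2300, 1971, year):,.0f}")
```

Twenty-five doublings take a few thousand transistors into the tens of billions, which is the same order of magnitude as the largest chips of recent years.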

So, now you have the fundamental parts of a central processing unit. There are a number of transistors that work to either boost or switch a signal. Those are then added to a chip referred to as an “integrated circuit.”

The integrated circuit allows all of those transistors to live in the same place, on the same piece of silicon. Through that circuit, the CPU manages and directs information.

The result is something like the processors found in Intel Evo laptops.

What’s the process for the CPU to manage information, though? All we’ve learned so far is that there are billions of little nodes for electricity to travel through. That doesn’t give us much information on why or how things work.

Fetch, Decode, Execute

There’s a cycle involved in most, if not all, of the functions that your computer goes through. If you were looking for something exciting, though, you’re a little out of luck.

The fetch-execute cycle (also called the fetch-decode-execute cycle) is the way in which your computer draws on information and puts it into action. As an easy way to imagine how this works, let’s think about the CPU of a digital clock.

The clock’s job is relatively easy. It has to ensure that it counts up. In order to do this, it needs to fetch, decode, and execute commands from its CPU. How does it do that?

Fetch Stage

Let’s stay with the clock example, as it’s a simple and understandable process.

The clock starts at zero. The CPU “fetches” the command for the clock when it’s at zero. To do that, it reaches into the data storage included in the device. The message from storage gets sent, and the CPU has the particular code for that value.

At that point, the CPU’s focus is on the value of zero.

Decode Stage

Once the zero value gets loaded into the CPU, it’s sent into what’s referred to as the “instruction register.”

At this point, the task is to “decode” the instructions included in the value of zero. The CPU deconstructs the message, then loads the result into what’s referred to as the “accumulator.”

So, for example, the instructions included in the code for zero might be “tick forward one second.” That command is what the accumulator needs in order to move on to the next step.

Execute Stage

The instruction register supplies a particular value from the decoded instructions of the initial value. The CPU acts on that value to complete the task at hand.

In this case, that task is to move the clock along by one second. At the same time, the new value loaded into the accumulator cycles back to the first step of the process, where it becomes the value in the program counter.

That all might seem a little bit complicated, but it’s simple in the case of a clock. The first value is zero; that message gets decoded, and the resulting value in the accumulator is one.

The accumulator value then becomes the new starting value. So, the result is that the clock CPU has to find the instructions for the value of “1.” The process continues, and the next value to enter the equation is “2.”

In a linear process like that of a clock, the cycle continues ad infinitum, returning to the initial value every 24 hours.
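The clock walkthrough above can be sketched in a few lines of Python. This is a simplified model of the cycle as this article describes it, not how a real clock chip is built: the `"TICK 1"` command format, the function names, and the empty `storage` table with a default command are all hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def fetch(storage: dict, program_counter: int) -> str:
    """Fetch: look up the stored command for the current value."""
    # Hypothetical default: every value maps to "tick forward one second".
    return storage.get(program_counter, "TICK 1")


def decode(instruction_register: str) -> tuple:
    """Decode: split the command into an operation and an amount."""
    operation, amount = instruction_register.split()
    return operation, int(amount)


def execute(operation: str, amount: int, current_value: int) -> int:
    """Execute: carry out the operation and return the new accumulator value."""
    if operation == "TICK":
        # Wrap around to zero after 24 hours.
        return (current_value + amount) % SECONDS_PER_DAY
    raise ValueError(f"unknown operation: {operation}")


def run_clock(start: int, cycles: int) -> list:
    """Run the fetch, decode, execute loop and record each new value."""
    storage = {}  # no special commands stored; fetch falls back to "TICK 1"
    program_counter = start
    history = []
    for _ in range(cycles):
        instruction_register = fetch(storage, program_counter)
        operation, amount = decode(instruction_register)
        accumulator = execute(operation, amount, program_counter)
        history.append(accumulator)
        program_counter = accumulator  # the result feeds the next cycle
    return history


if __name__ == "__main__":
    print(run_clock(0, 3))
    print(run_clock(SECONDS_PER_DAY - 1, 2))
```

Starting from zero, the loop produces 1, 2, 3, and starting one second before midnight, it wraps back to zero, just as the clock returns to its initial value every 24 hours.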

The Process in Complex Computers

Obviously, we’re not talking about the future of clocks in this article. As things get more complicated, different functions get added into that fetch-execute cycle.

In complex systems, individual cycles handle different values, and those cycles become pieces of a larger cycle. Work enough of those cycles into one another in the form of a computer language, and you get entertaining video games like Pong, Prince of Persia, and the early Mario games.

Those games, though, are written and coded by human beings. The inner workings of those things involve a lot of opportunity for error, which can lead to the loss of personal information and security breaches.

All of those processes of values, functions, and executions have long been packaged into programming languages that are simpler for people to work with.

So, the language gets translated into the instructions that a particular CPU understands. That compresses a lot of information for human beings and allows us to write complex code without getting carpal tunnel or developing a severe hunch.
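You can get a feel for that compression with Python’s built-in `dis` module, which lists the lower-level steps behind a function. One caveat: these steps are bytecode for the Python interpreter rather than instructions for the CPU itself, and the exact listing varies between Python versions, so take this as an illustration of the idea only. The `tick` function is a made-up example.

```python
import dis


def tick(seconds):
    """One readable line of high-level code."""
    return (seconds + 1) % 86400


if __name__ == "__main__":
    instructions = list(dis.get_instructions(tick))
    for instruction in instructions:
        print(instruction.opname, instruction.argrepr)
    print(len(instructions), "low-level steps for one line of Python")
```

A single line that a person can read at a glance expands into several smaller steps, and each of those steps takes still more work from the CPU underneath.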

Still, though, the process described above doesn’t account for the miraculous level of technology in your cell phones and laptops. The missing factors are speed and scale.

Multiply that process millions of times over and speed it up by orders of magnitude. The result is a series of little cycles that command a device to compress videos, play video games, communicate across the globe, and more.

Want to Learn More About Computer Performance?

Hopefully, the explanations above gave you a better idea of how a central processing unit works. It’s difficult to wrap your head around the scale that these things work on, but the basic principles are digestible.

We’re here to help you out with more information on modern technology. Explore our site for more ideas on the computing industry, getting a brand new laptop, and much more.